The Hume OPC Supervisor Application

This document is best viewed from the Hume Datahub SDK documentation

which provides a hyperlinked table of contents document in the frame

immediately to the left of a larger frame where this document is

displayed.

(C) Copyright 2012, Hume Integration Software, All

Rights Reserved

This document may not be reproduced

or redistributed without prior

written

permission of Hume Integration Software. Licensed users of HIS

provided

software have permission to reproduce or electronically distribute this

document to support their usage of the HIS software.

Introduction

This document describes the configurable OPC Supervisor

Application

provided by Hume Integration Software as part of its Datahub SDK

product. OPC refers to a set of communication standards developed

by the non-profit OPC Foundation for the integration of computerized

devices such as sensors, instruments, and programmable logic

controllers (PLCs). OPC was originally

an acronym for OLE for Process Control. Over time the term OLE has been

deprecated in favor of the terms

COM and DCOM which refer to Microsoft's proprietary implementation of

inter-process communication using remote procedure calls (RPC).

The term COM is an acronym for Component Object Model, and the term

DCOM is an acronym for Distributed COM. DCOM implies using RPC

calls across a network for communication between computer

systems. DCOM can also be used for the modern case of virtualized

hosts running on a single computer where networking software is used to

communicate with the hosts running in virtual machines without external

hardware.

The OPC Foundation has created several families of standards. The

Data Access standards which use COM are by far the most widely

deployed. The Unified Architecture (OPC-UA) standards, which do

not use COM and are therefore not limited to Windows systems, are newer and

have not yet had sufficient time for wide adoption. The Hume

OPC

Supervisor application provides the client-side logic of the popular

Data Access standards, and it enables the deployment of an application

with multiple simultaneously active connections to multiple OPC

servers. There have been three major versions of the Data Access

(DA) standards. The OPC Supervisor is designed to work with all

DA versions. Because of improvements in the standards, you will

experience much greater ease of using the application if you are able

to use Version 3 servers, or Version 2.05 servers that support the

optional browsing interface. This statement is true for all OPC

DA applications - not just the Hume application.

The usual mode of operation of an OPC DA server is to provide one or

more clients with periodic reports of changing data values. The

programming model is that the world is made up of named items,

which are organized into named groups. Each item has a type of

data associated with it for value reporting such as the double

precision floating point or 4 byte signed integer data types. An

item may support reading or writing its value in addition to the

streaming of data change reports. An item can belong to more than one

group. The Supervisor application supports your productivity

using OPC DA by discovering the items served, and their properties such

as read/write access, or analog range limits. The application

provides for manually configuring items when they cannot be discovered

programmatically. You are able to quickly define groups of items

for reporting and configure the desired group properties such as the

reporting period. Internally the application uses high

performance in-memory SQL tables to manage the configuration and the

collected data. The configuration includes your preferences for

automatic starting of OPC server connections and collection of

data. The configuration is saved to the file system where it

controls future startups. For example, a common scenario would be

to deploy the application as a Windows

service program that runs at system bootup.
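
The item and group model described above can be pictured with a small Tcl sketch. The names used here (the groups dictionary, the item IDs, and the groups_of helper) are hypothetical. They only illustrate that one item may belong to several groups, each with its own reporting period, and they are not the Supervisor's actual table schema.

```tcl
# Hypothetical illustration of the OPC DA item/group model using plain Tcl dicts.
# Each group has a reporting period in milliseconds and a list of member item IDs.
set groups [dict create \
    fast [dict create period_ms 500  items {Line1.Temp Line1.Pressure}] \
    slow [dict create period_ms 5000 items {Line1.Temp Line1.BatchCount}]]

# Return the names of all groups that contain the given item.
proc groups_of {groups item_id} {
    set result {}
    dict for {name props} $groups {
        if {[lsearch -exact [dict get $props items] $item_id] >= 0} {
            lappend result $name
        }
    }
    return $result
}

# Line1.Temp is a member of both groups.
puts [groups_of $groups Line1.Temp]
```

In the Supervisor itself this information is held in in-memory SQL tables rather than in dictionaries.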

Feature Summary

- A working, polished, and complete application for configuring and

managing OPC data collection.

- Connects to multiple Data Access Servers of any version.

- Leverages browsing and other introspection features of

OPC to avoid configuration work.

- Built-in monitoring of connection health and automatic recovery

on failure.

- Built-in connectivity to your custom SCADA and HMI applications

running on other computers or platforms using the DMH.

- Direct support for tunneling, aggregation or distribution of your

OPC data using the DMH.

- Integrates easily with the Hume SECS/GEM software or the Hume

Data Collection application component.

- Simplifies working with OPC by managing low-level handles and

type conversions automatically.

- Frees the developer from the low-level drudgery of working with

COM and explicit memory management. COM is a dead-end technology

so why waste your time?

- Frees the developer from needing to code the configuration of

groups, items, update subscriptions, and the startup or shutdown

of data collection.

- Your applications only need to focus on processing collected data

and supervisory control.

DMH Message System Integration

The OPC Supervisor application enables you to configure

OPC data collection without writing code. In a typical scenario,

the application

performs the desired data collection on behalf of external processes

that connect to the OPC Supervisor using the Hume DMH message system. So the OPC

Supervisor is a re-usable building block that provides all the

configuration dialogs and logic necessary to use OPC, and the external

application processes are greatly simplified. The OPC Supervisor

configuration provides for optional startup as a DMH message system

server to enable this typical scenario. The OPC Supervisor

is implemented using a Tcl

programming language interpreter which has been extended by Hume

Integration Software with additional commands for automation

applications such as the DMH message system and SQL tables. The

Supervisor is able to receive and process Tcl language or SQL language

commands as it runs. This provides the means for external

processes to control the application using commands sent through the

message system without having to define and deploy a custom set of

commands. The very same procedures that the Supervisor uses can

be used by external applications even when those applications are

written with different programming languages such as any of the

language choices supported by .NET.

The use of the DMH message system provides a straightforward and direct

means of distributing OPC data across your network. Tunneling

refers to communicating across a firewall. The DMH can perform

tunneling using a single TCP/IP port of your own choice.

Aggregation refers to combining the data from multiple servers to a

common destination such as a database. The OPC Supervisor is

easily used to aggregate data feeds. First, it directly supports

connecting to multiple servers from a single Supervisor process.

Second, it can be deployed in multiple instances across multiple

computer systems. Then you can use a DMH message system client

library such as the .NET

Component and make connections to multiple OPC Supervisor

applications from a single .NET process. Using the DMH message

system is one possibility for data aggregation and there are certainly

others. For example, there are various ways to tie into a

common database and direct support for ODBC

database connections is a feature of the Tcl language used by the

Supervisor.

The OPC Supervisor is able to easily serve multiple DMH clients

simultaneously. So instead of aggregating data it is also possible

to disperse and distribute it for various purposes such as status

viewing, SPC analysis, logging, and historical trending. OPC

client software is capable of both receiving and writing data. So

it is possible to bridge distributed OPC servers by piping the data

through the DMH message system combined with reading and writing data

at the endpoints using separate OPC connections. The

Supervisor uses general purpose SQL tables that have subscription

features for its implementation backbone instead of

hard coded data structures. This provides needed flexibility for

applications to grow and evolve to meet changing requirements.

With the integration of subscription notification and a high level

deployment language the Supervisor is wicked smart as far as conserving

network and processing resources. Example subscription

applications are included that coalesce the many item changes of an OPC

group update into a single DMH message for sending to a subscription

client.
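
The idea of coalescing a group update can be sketched as follows. This is not the code of the included example applications. The proc name coalesce_group_update, the message layout, and the sample item IDs are assumptions made for illustration.

```tcl
# Hypothetical sketch: combine the item changes of one group update into one message.
# Each change is a list {item_id value quality timestamp}.
proc coalesce_group_update {connection group changes} {
    # The message starts with a header naming the connection and the group.
    set msg [list update $connection $group]
    foreach change $changes {
        lappend msg $change
    }
    return $msg
}

set changes {
    {Line1.Temp 71.5 good 2012-03-01T10:00:00}
    {Line1.Pressure 2.04 good 2012-03-01T10:00:00}
}
set msg [coalesce_group_update c2 fast $changes]

# One message carries both changes, so a subscriber receives a single delivery.
puts "elements in message: [llength $msg]"
```

Sending one message per group update instead of one message per item keeps the message count proportional to the number of updates, not to the number of items.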

When DCOM was first developed, it was an attractive proposition because

it offered the simplicity of a distributed deployment that was

identical to a

single system COM deployment. It is now apparent that DCOM lacks

the configuration and diagnostic features needed to properly manage

network

performance and security issues. Tedious security configuration

has to be performed at each networked computer because of the

widespread existence of malicious software. The DMH message

system is much easier to deploy and manage as a replacement for using

DCOM to bridge computer systems. It is capable of higher

performance without the complexity of multiple threads since the sender

of an asynchronous message is not blocked until a DCOM RPC method call

returns. The DMH also provides queuing which results in stable

operation when there are bursts of data production activity or

occasional pauses of data consumption.
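
The effect of queuing can be illustrated with plain Tcl and its event loop. This sketch does not use the DMH. The procs send_async and consume_one are hypothetical stand-ins that show a sender returning immediately while a consumer drains the queue at its own pace.

```tcl
# Hypothetical sketch of how a queue decouples a bursty producer from a consumer.
set queue {}
set consumed {}

# The sender only appends to the queue and returns immediately.
proc send_async {msg} {
    global queue
    lappend queue $msg
}

# The consumer takes one message per event loop turn until the queue is empty.
proc consume_one {} {
    global queue consumed done
    if {[llength $queue] == 0} {
        set done 1
        return
    }
    lappend consumed [lindex $queue 0]
    set queue [lrange $queue 1 end]
    after 0 consume_one
}

# A burst of ten messages is accepted without waiting for the consumer.
for {set i 1} {$i <= 10} {incr i} {
    send_async "sample $i"
}
after 0 consume_one
vwait done
puts "consumed [llength $consumed] messages in order"
```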

When the OPC Supervisor process is started as the server or client of a

DMH

message

group, the following mailboxes are used:

- SQL commands are accepted in mailbox SERVER_SQL for

remote

table data access.

- Tcl language commands are accepted in mailbox SERVER_RPC

for remote control interaction. The mailbox command processing

can also be used by the remote introspection debugger, inspect.

- Subscription messages such as notification of OPC data changes

can be sent

to mailbox destinations, and specific procedures have been developed

for optimized use of this feature. There is also

generic support to subscribe to changes in SQL table data and to

distribute or replicate these changes as they occur. The

subscription mechanism provides an elegant interface to collected OPC

data such that the

integrator does not need to change the supervisor logic that acquires

the data in order to use it in new ways.
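
As a rough sketch of how a client might address these mailboxes, the following Tcl code routes SQL text to SERVER_SQL and Tcl command text to SERVER_RPC. The procs remote_sql, remote_tcl, and record_send are hypothetical. The actual delivery call depends on the DMH client library in use, so it is represented here by a caller-supplied command that only records what would be sent.

```tcl
# Hypothetical sketch: route command text to the mailboxes listed above.
# send_cmd is a caller-supplied command prefix that delivers one message.
# It is a placeholder, not a DMH library call.
proc remote_sql {send_cmd stmt} {
    return [uplevel #0 [linsert $send_cmd end SERVER_SQL $stmt]]
}
proc remote_tcl {send_cmd script} {
    return [uplevel #0 [linsert $send_cmd end SERVER_RPC $script]]
}

# Stand-in transport that only records the mailbox and the message text.
set sent {}
proc record_send {mailbox text} {
    global sent
    lappend sent [list $mailbox $text]
    return ok
}

remote_sql record_send "select * from opc_item where ocname='c2'"
remote_tcl record_send "info tclversion"
foreach m $sent {
    puts "[lindex $m 0] <- [lindex $m 1]"
}
```

In a real deployment the record_send stand-in would be replaced by the send or transaction call documented for your DMH client library.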

Hume

Integration provides libraries to use the DMH message

system

from various programming environments such as .NET, POSIX C/C++, Visual

Basic, Visual C++, Java, or Tcl/Tk for both Windows and POSIX computer

systems.

Installation

There are two options for installing the OPC software depending on

whether development using Tcl programming is desired.

If Tcl programming does not need to be supported, then a single large

executable file, HumeSDK.exe,

can be used instead of installing the Hume Datahub SDK. The

program can be placed in any convenient directory. The licensing

file, licenses.txt, should be

placed in the same directory. The program will create and use a

subdirectory, data, for saved

configuration

information by default. You can also create and set the

environment variable HUME_OPC_DATA_DIR

to a pathname using Unix/style/slash separators to have the program use

a different directory. The usual scenario is that there is some

application development using a different platform such as .NET or

Java, running on any computer - not just the system that has the OPC

software installed. Each DMH client library has its own

documentation. Follow the instructions for installing the DMH

client software libraries for your chosen platform on the development

computer. The .NET SecsPort library has methods to start and use

the OPC software, and to notify the application of OPC data changes

using events.

When Tcl programming is to be supported, the OPC application files are

installed using the

SETUP

program that comes with the Datahub SDK. Installing the OPC

Software is a choice from the SETUP program - make sure that the

relevant checkbox is checked.

The SETUP program

unpacks

the opc.zip archive into a set of directories underneath

your chosen installation path. The default base of the Windows

installation is c:\usr\local. A Windows

program

item is created to invoke the OPC

Supervisor from the Start Menu as part of the Hume Datahub SDK program

item group. The SETUP program does not create any registry

entries for OPC - none are needed to deploy the client side of

OPC. Here are the directories

that

are created, and their contents:

| Directory | Description |

...\lib\humeopc1.0

...\lib\humeopc1.0\data

|

This directory contains Tcl source code files and the

humeopc.dll file that implement OPC applications and features. If

you do not choose to install OPC Software from the SETUP program this

directory and its contents are not installed.

The source code files in this directory are a resource for a Tcl

developer who wishes to understand in detail how the application works.

By default, a subdirectory, data,

is

created and used for saved configuration information. The program

also writes a startup.log file in this directory if it exists to help

with debugging the program startup. Startup can be tricky with

service programs because they may not use the customary environment or

user credentials. A different directory is used by setting the

environment variable HUME_OPC_DATA_DIR

before starting the program.

The OPC Supervisor application starts running by executing the Tcl code

package require opcclient.

This statement is the essence of the opcclient.bat

program. This one statement can be executed to combine running

the OPC application with another Tcl/Tk application such as the Hume

SECS/GEM Host Supervisor. The package require statement leverages

the package facility of Tcl which provides a means of deploying

versioned application components without explicit pathname dependencies.

The directory also contains some example .bat files that are written in

Tcl code and are executable using Tcl/Tk. The examples

demonstrate how to subscribe to OPC data collected by the Supervisor

from another process. The bat files include:

sub2group.bat - a

practical example of subscribing to the data of one group.

subscriber.bat - a

practical example of subscribing to all of the data from one OPC

connection.

hubclone.bat - an example

of subscribing to all the SQL table data and all the table data changes

of a process.

|

...\html85

|

This directory is the root of

the HTML documentation for the Datahub SDK.

|

...\bin

|

This directory has executable

files for the Datahub SDK such as dmh_wish.exe,

inspect.exe, datahub85.bat, and hub85.

|

...\lib\dde1.3

...\lib\reg1.2

...\lib\tcl8.5

...\lib\tcl8

...\lib\tk8.5

|

The Datahub SDK installs and uses the open source platform

Tcl/Tk. These directories contain support code for Tcl and

Tk. The Tk widget demonstration program is

...\lib\tk8.5\demos\widget.

|

| ...\lib\dmh85 |

This directory holds Hume developed software that provides

the dmh package which

implements

Datahub features such as the DMH message system and SQL. The licenses.txt licensing file is found

in this directory.

|

OPC Server Installation

The Hume OPC Supervisor application performs the client role of the OPC

protocols. The server role is performed by interfaces to PLCs

and sensor devices. The user of the OPC Supervisor must have

access to an OPC server to make use of the Supervisor

application. Installation of a server is more involved than

installation of client software. It is the role of the server

provider to furnish the server enumerator program, opcenum.exe, that is

a redistributable product of the OPC Foundation. Further, the

server provider is responsible for making registry entries that are

used to enumerate or launch the server program.

During learning and development, it is useful to have a server

installed that is not connected with any manufacturing or production

activity. Currently Hume does not provide an example OPC server

with the

Datahub SDK installation. However, we are able to provide

assistance upon request.

If the target server is to run on the same computer as the Supervisor

application then no special security configuration needs to be

performed. If the target server is to run on a different

computer, virtual or physical, then explicit configuration of

permissions must be done for all computers involved.

Instructions on how to perform this task may be obtained from the OPC

Foundation website as the download entitled Using OPC via DCOM with

Windows XP Service Pack 2 in the White Papers section.

The OPC Standards

The OPC Foundation has developed the following standards upon which

this software is based.

- OPC Common Definitions and Interfaces, Version 1.1, December 2002.

- Data Access Custom Interface Standard, Version 3.0, March 2003

- Data Access Custom Interface Standard, Version 2.05, December

2001.

- OPC Complex Data Specification, Version 1.0, December 2003

The standards are available to OPC Foundation members through http://opcfoundation.org.

Hume Integration Software is a Company member of the OPC

Foundation. We are authorized to access the standards information

and support our customers in the course of selling and supporting our

OPC compliant products.

The OPC Supervisor Application User Guide

The OPC Supervisor is an example of using the Hume Datahub Application

and tailoring it for a specific purpose where the features that support

automation applications are particularly advantageous. The

Datahub is an in-memory, low-latency SQL database, a

subscription

server, a configurable Tcl/Tk interpreter, and a Distributed Message

Hub

(DMH) server. These capabilities combine with powerful synergy as

testified by the commercial success of the Datahub SDK in Semiconductor

manufacturing since its inception in 1995.

You interact with the Supervisor application to configure connections

to OPC servers and the desired data collection to be performed with

each connection. Data reports from the servers are received and

handled by the Supervisor logic. Table records for the involved

Items and Groups are updated as a result of each report

notification. Because the Supervisor also functions as a

subscription server, the subscription notifications containing selected

data changes are forwarded to external applications, or used locally to

activate custom application logic. In a nutshell these

applications are freed from understanding the details of COM and OPC,

and they do not need to replicate any of the configuration

features. The applications can also be run on non-Windows systems

or future versions of Windows that do not support COM.

A Windows Start Menu item for the OPC Supervisor is created in the

"Hume Datahub SDK 8.5" program item group when the Datahub SDK is

installed. In addition to the Start Menu item, the program

may be

started from a command line by executing:

dmh_wish -tclargs "package require opcclient"

It is also a property of dmh_wish

that when it is renamed, it performs a package

require statement for its new name. So as a one-time task you

can copy dmh_wish.exe to opcclient.exe, and then execute opcclient.exe from your desired

application directory whenever you wish to run the program.
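
When the Supervisor is combined with another Tcl/Tk application, the package require statement can be wrapped so that a missing installation is reported instead of stopping the script. This is a minimal sketch. The proc name start_opc_supervisor is hypothetical, and the package is only found when the script runs under dmh_wish with the OPC software installed.

```tcl
# Sketch: load the OPC Supervisor from within an existing Tcl/Tk application.
# Returns a two element list: a success flag and the version or the error text.
proc start_opc_supervisor {} {
    if {[catch {package require opcclient} result]} {
        return [list 0 $result]
    }
    return [list 1 $result]
}

lassign [start_opc_supervisor] ok detail
if {$ok} {
    puts "opcclient loaded, version $detail"
} else {
    puts "opcclient not available: $detail"
}
```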

If you have installed the HumeSDK.exe

program instead of the Datahub SDK, then you may start the OPC

supervisor program using the command line argument -opcclient:

HumeSDK

-opcclient

By default, the OPC Supervisor creates and uses a sub-directory of the

working directory, named data,

to save and restore configuration data. A different directory can

be specified by defining an environment variable, HUME_OPC_DATA_DIR, and setting its

value to the desired directory pathname using a Unix style slash

delimited directory path. When the environment variable is used,

the value is set before executing the OPC Supervisor application.
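
The directory choice described above can be sketched in Tcl as follows. This is an illustration of the documented behavior, not the Supervisor's own code. The proc name opc_data_dir and the example pathname c:/hume/opcdata are hypothetical.

```tcl
# Sketch of the data directory choice described above.
proc opc_data_dir {} {
    global env
    if {[info exists env(HUME_OPC_DATA_DIR)] && $env(HUME_OPC_DATA_DIR) ne ""} {
        return $env(HUME_OPC_DATA_DIR)
    }
    # Default: a subdirectory named data under the working directory.
    return [file join [pwd] data]
}

# The variable is set with slash separators before the application starts.
set env(HUME_OPC_DATA_DIR) "c:/hume/opcdata"
puts [opc_data_dir]
```

From a Windows command prompt the same effect is obtained by setting the variable in the environment before launching the program.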

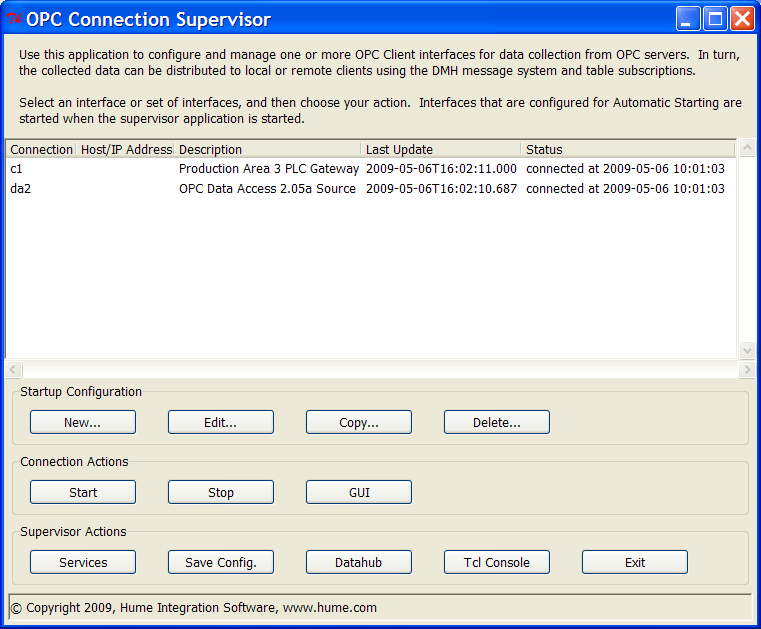

Supervisor Main Window

When the Supervisor application is started, the following window is

shown:

There is a list of your configured connections in the table display,

and three rows of action buttons. In general, the application

uses the object-action style - you select one or more objects from a

displayed list using the mouse, and press an action button to act upon

the selection. The shift and ctrl keys can be used with the mouse

to extend a selection or edit the selected set.

Another important general concept is that you are allowed to make

configuration changes that are not saved to the file system unless you

explicitly use the Save Config.

action or Save action

buttons. So you can create temporary connections to servers, and

experiment without creating or changing a configuration that is used at

startup. When you have unsaved configuration changes, you see a

status message at the bottom of this window, and you are reminded of

your unsaved changes when you initiate closing this window or you Exit

the application.

Similarly, there is a deliberate break between your configuration

and the actual state of a connection with the server. You can

perform configuration work without being online with the target

server. If you are online with a server and you make

editing changes, you are often provided with a checkbox on the editing

dialog so that you can explicitly specify whether changes should be

applied to the connection. There are action buttons such as Pause

or Resume that affect the current state of a group without changing the

configuration that is applied at startup. Group structural

changes such as creating groups, and adding or removing items are

synchronized with the server if you are online.

The list of Connections is dynamic - it updates to display the

time of the latest group data input as well as important status events

such as connecting or disconnecting. You usually will not see

automatic connection recovery events because they happen quickly.

You may notice that the "connected at" timestamp is more recent.

The first row of buttons in the frame labeled Startup Configuration has actions

related to editing connection configurations and the second row in the

frame labeled Connection Actions

has actions related to using a connection:

- New... is used to create

a new connection definition

- Edit... is used to

edit or change a connection definition

- Copy... is used to make a

"deep" copy of a connection definition. The New... and Edit... actions show an editing

dialog that provides for configuring the server information and startup

behavior. The GUI button

in the next row shows a Graphical User Interface which provides for

configuring items, groups, and memberships. The Copy... action

copies the entire configuration including the server properties and the

item and group configuration to a new name. The copied connection

is not started.

- Delete... is used

to delete one or more connection configurations after a confirmation

dialog.

- Start... and Stop... are used to connect to the

server or disconnect.

The buttons in the last row, in the frame labeled Supervisor Actions, are:

- Services This

brings up a dialog for you to configure starting as a DMH message

system server. You can have more than one instance of the OPC

Supervisor running and acting as message system servers on a computer

as long as each instance is configured with a unique DMH group

name. The default DMH group name is OPC which is mapped to the

socket port of 5227.

- Save Config. This

action saves your current configuration as a set of table files in the data subdirectory. The table

files are loaded when you start future sessions. In a production

setting, the administrator should make backup copies of the table

data. An integrator may want to copy the table files into a

separate directory to preserve a configuration for backup or future

reference. The amount of table data, meaning the number of tables

and table

rows, does not increase as OPC data is collected. So there is no

ongoing table or file maintenance needed.

- Datahub This

action shows the Datahub table management GUI. This window is

mostly of use to a developer since the application windows provide for

configuring and displaying the application table data.

- Tcl Console This

action shows a command console which can be used for interactive Tcl

commands.

- Exit The Datahub

and Console windows have Exit actions which will not remind you of

unsaved changes like this one will.

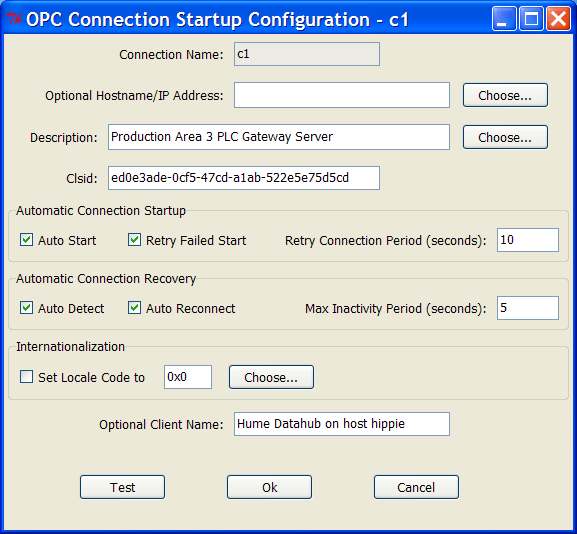

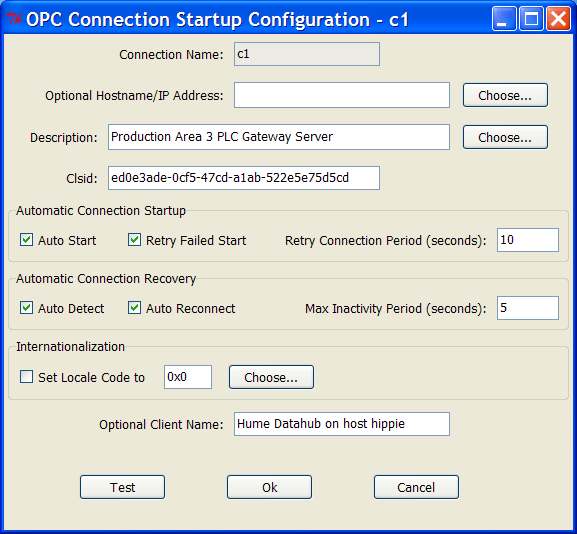

Connection Editor

Here is the Connection editing dialog that is shown for the

New... or Edit... actions.

Leave the Hostname/IP Address

entryfield blank or set it to localhost

to target a server that is running on the same computer. The Choose... button displays a

selection list of the hosts in your Windows Network Neighborhood.

Set the Hostname or IP address before using the next Choose... button which is used to

select a server and set the Description

and Clsid entryfield

values. This Choose...

button uses the OPC Foundation server enumeration program on the

specified host. You are able to manually edit the Clsid if

enumeration is not possible. Also, the server description can be

edited as you wish.

The other Connection properties are as follows:

- Auto Start specifies

whether connecting and initializing data collection is desired when the

program is started.

- Retry Failed Start

specifies whether the program should retry connecting if a connection

attempt fails. The Retry

Connection Period entry specifies the retry interval period in

seconds.

- Auto Detect specifies

whether the application should perform smart polling to determine if

the connection is functional. The Max Inactivity Period specifies a

time period in seconds used for smart polling. If there has not

been group input received within the previous period, the logic

executes a method call to test the connection.

- Auto Reconnect is

relevant if Auto Detect is

true. If the smart polling determines that the connection is

broken, the Auto Reconnect

setting determines whether the logic attempts to reconnect. The Retry Failed Start and Retry Connection Period are also

applied to the reconnect attempt if necessary.

- Locale selection - the

checkbox, entryfield, and Choose...

button let you configure a choice of Locale from the list supported by

the server. When the connection is made, configuration choices

such as Locale are synchronized with the server.

- Test Pressing this button

tests whether you can connect to the configured server.

- Ok validates and applies

your edits to the in-memory Datahub tables. The changes are not

saved to the file system until you use a Save action.
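
The interaction of the Auto Detect, Max Inactivity Period, and Auto Reconnect settings can be sketched as a small decision procedure. The proc name poll_decision, the dictionary keys, and the returned state names are hypothetical. The Supervisor's own logic may differ in detail.

```tcl
# Hypothetical sketch of the smart polling decision described above.
# cfg is a dict with keys auto_detect, max_inactivity (seconds), auto_reconnect.
# idle_seconds is the time since group input was last received.
# test_cmd is a script that returns true when a test call to the server succeeds.
proc poll_decision {cfg idle_seconds test_cmd} {
    if {![dict get $cfg auto_detect]} { return idle }
    # Recent group input shows the connection is working, so no test is needed.
    if {$idle_seconds < [dict get $cfg max_inactivity]} { return healthy }
    # No input within the period: execute a method call to test the connection.
    if {[uplevel #0 $test_cmd]} { return healthy }
    if {[dict get $cfg auto_reconnect]} { return reconnect }
    return broken
}

set cfg [dict create auto_detect 1 max_inactivity 30 auto_reconnect 1]
puts [poll_decision $cfg 5  {expr 1}]   ;# recent input, the test is skipped
puts [poll_decision $cfg 45 {expr 0}]   ;# quiet for too long and the test fails
```

Because the test call is made only after a quiet period, a busy connection is never polled.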

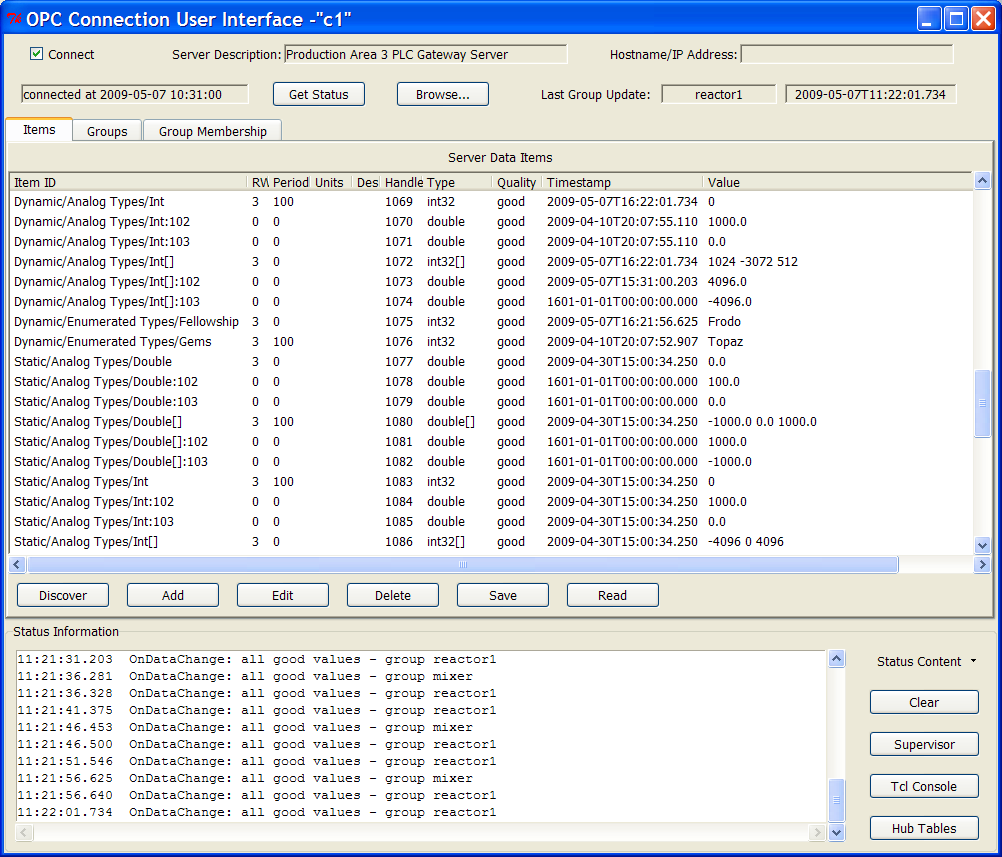

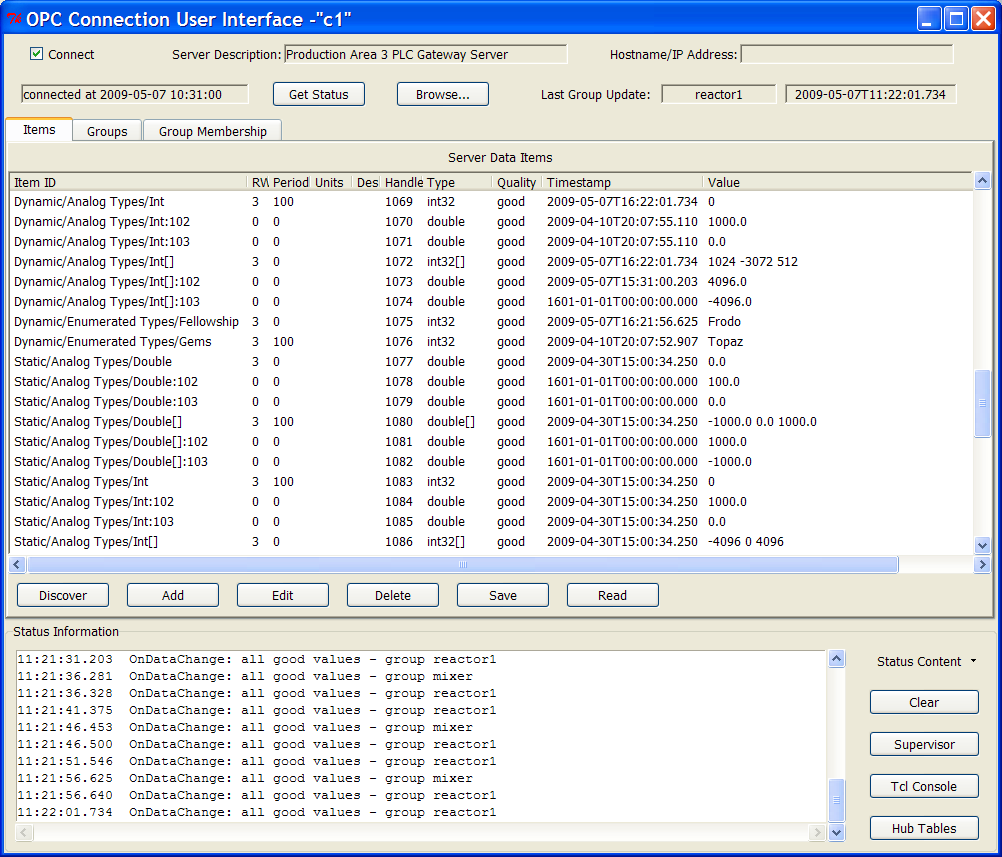

Connection User Interface

The GUI window is used to configure and manage the data collection

configuration of a connection. Here is an example:

The window is resizable and you can stretch it to display more data

lines. The central portion is a tabbed notebook area that is used

to display Items, Groups, and the association of Items

in Groups on the Group Membership

pane. The display is dynamic - item values, qualities and

timestamps are updated as new data is received. At the top

of the window, the Last Group Update

fields update too.

The bottom frame labeled Status

Information has a scrolling display that can be distracting if

updates are occurring too often. The Status Content widget on the

right side shows a menu that lets you control the displayed

content. You are able to have updates of items displayed if you

want to see more detail.

Notice the checkbox in the top left corner labeled Connect. Use this checkbox to

connect or disconnect from the server. It performs the same

actions as the Start and Stop buttons on the Supervisor

window.

At the top of the GUI window, the Get

Status button queries the server for status information and adds

the result to the scrolling Status Information display.

When you first connect to a server, the Items list is empty.

Press the Discover button

underneath the Items list. If your server supports browsing the

list becomes populated with the Items served. If your server does

not support browsing, then the Add

button can be used to manually create Item records. Before

performing a lot of manual editing, try the following first. Press the Groups notebook tab and press the Discover button on the Groups pane

to see if the server has any pre-defined Groups. If it does, the

logic will discover the items that are known because they belong to a

group. You need to have some Items in order to use the program

features for data collection. The only essential properties to

configure for an item are the fully qualified identifier, Item ID, and

the server connection name. If you have a list of the available

items in a plain text file, one per line, it is simple to

programmatically add them. You can cut and paste this code into

the Tcl console:

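# Name of the connection that the items belong to
set connection c2
# Plain text file with one Item ID per line
set filename "c:/tmp/itemlist.txt"
set fid [open $filename]
set data [read -nonewline $fid]
close $fid
foreach line [split $data \n] {
    # Ignore surrounding whitespace and skip blank lines
    set line [string trim $line]
    if {$line eq ""} continue
    set stmt "insert into opc_item (ocname, item_id) values ('$connection', '$line')"
    set reply [SQL $stmt]
    # Show each statement and the reply from the SQL command
    puts "$stmt \t $reply"
}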

You may optionally configure other properties of items such as EU range

limits and a description for the use of your external

applications. The Supervisor does not need to know any

information beyond the Item ID.

The action buttons for Items are straightforward. Add, Edit, Delete, and Read act upon selected items.

The Save button saves all of

your data for all connections. It is deliberate that the

application does not feature a Write

button for interactive use. OPC is used for industrial

automation purposes and there can be risk with writing values that have

control implications. You are able to issue write commands from

your own applications, and in that context you can deploy appropriate

security, validation, and logging features. For example, an

integrator could use the Hume Data Collection Component (DCC) and use

the data collection group feature to implement writing to a control

item. The DCC provides login security and the act of data

collection would capture and log the action and its context.

The most efficient means of collecting data with OPC is to add Items to a Group and receive periodic reports

of data changes from the server. Press the Groups notebook tab to bring up the

Groups Pane. Use the Create

action to create a new group. The Clone action makes a new group by

copying and renaming an existing group. Once you start editing

you see the "You have Unsaved Changes!" message. Press the Save button to save all of your

configuration data.

Group Editor

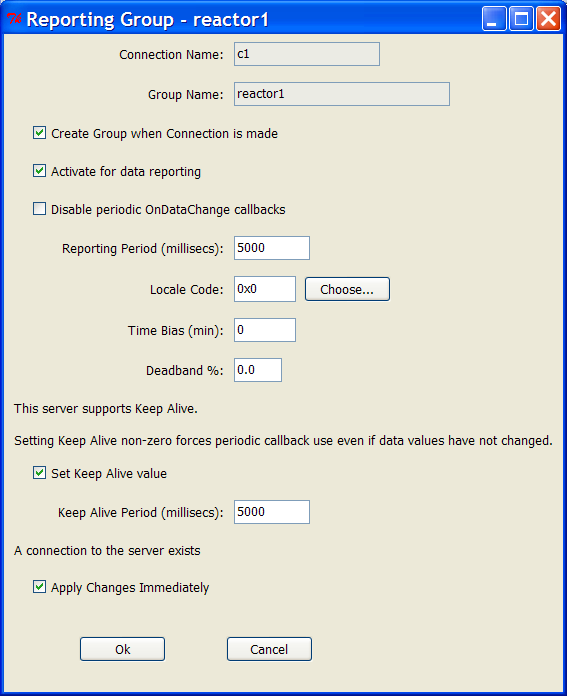

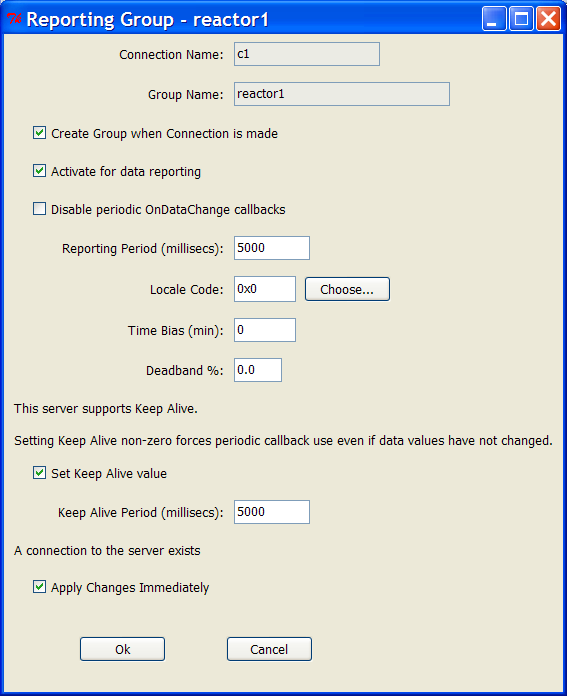

Here is the editing dialog that is used for the Create and Edit Group actions:

You may see fewer configuration features depending on what is supported

by your server and whether you are connected. Here are notes on

the features:

- Create Group when Connection is

made - In most cases you will want your configured Group to be

instantiated at the server when you connect, so usually this checkbox

is checked.

- Activate for data reporting

- OPC has a concept of a group being defined at the server but not

being active for data access. Make sure this button is checked to

have data reporting enabled.

- Disable periodic OnDataChange

callbacks. This checkbox applies for an active

Group. You may pause or resume the periodic OnDataChange callback

notifications using this checkbox. When the periodic callback is

disabled, you are still able to request a Refresh which causes the

server to execute the OnDataChange callback in response.

- Reporting Period -

specify a number of milliseconds for the periodic OnDataChange callback

notifications. The value of 0 instructs the server to use

the minimum supported period.

- Time Bias - The default

time offset is 0 minutes meaning reported time values are for the UTC

timezone. Use this

property to set a desired time offset in minutes for the group.

- Deadband Percent - A

deadband percentage value may be established for the group to eliminate

reporting

of insignificant data changes. The deadband value only applies to

Analog data items.

- Keep Alive - This

property is only supported for a Version 3 server.

If set to a non-zero value of milliseconds, the OnDataChange callback

is

called even if none of the group values have changed sufficiently to

warrant invoking the

OnDataChange callback. The feature eliminates the need for polling to

verify that the server is

functional. Setting the Keep Alive interval to the same as the

Reporting Period would be typical.

- Apply Changes Immediately

- Usually you are editing the group to define properties for the next

startup. There are other action buttons on the Group pane such as

Pause and Resume or Active Toggle that are for dynamic

change. When you are online, the dialog does support

editing the properties and immediately synchronizing the changes with

the server.
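As a rough illustration of what the Deadband Percent setting means: under the OPC Data Access specification, the percentage is applied to the engineering-unit (EU) range of an analog item, and a change is reported only when it exceeds that fraction of the range. The sketch below is plain Tcl and does not call any Hume or server code; the procedure name and the EU limits are assumptions chosen for the example.

```tcl
# Sketch only: the server performs this test internally; nothing here
# calls the Hume OPC commands.  eu_low and eu_high stand for the
# engineering-unit range that the server associates with an analog item.
proc exceeds_deadband {last_value new_value deadband_pct eu_low eu_high} {
    set threshold [expr {($deadband_pct / 100.0) * ($eu_high - $eu_low)}]
    return [expr {abs($new_value - $last_value) > $threshold}]
}

# With a 2 percent deadband on a 0..500 range the threshold is 10 units.
puts [exceeds_deadband 250.0 255.0 2.0 0.0 500.0]   ;# change of 5 is suppressed
puts [exceeds_deadband 250.0 262.5 2.0 0.0 500.0]   ;# change of 12.5 is reported
```

A larger percentage reduces callback traffic at the cost of coarser reported values, so the setting is usually tuned per group to the noise level of the signals it contains.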

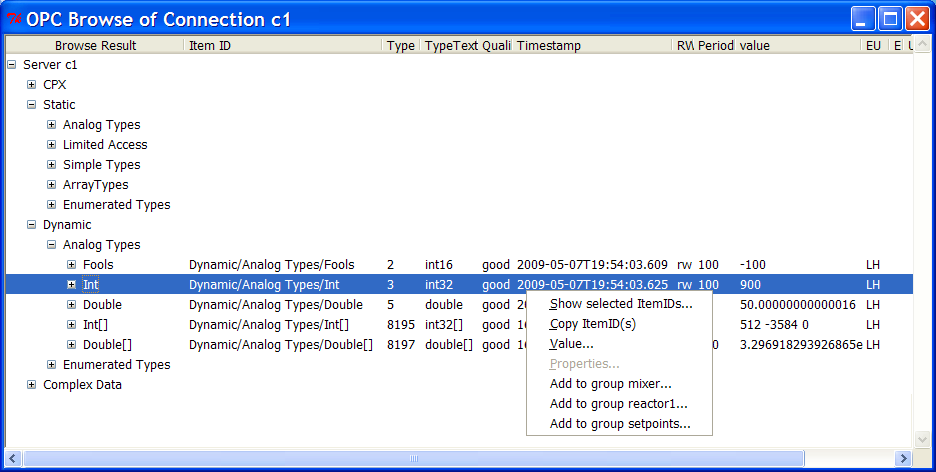

How do you add Items to a Group? Go back to the Items tab and select some items from

the list. Then click the right mouse button. You will be

presented with menu actions to add the selected items to each of your

Groups.

Browse Window

The browse window that is launched from the Browse... button in the top center

of the GUI features the same right click menu actions to add selected

items to Groups as the Items display. The hierarchical tree

display in the browse window has extremely powerful selection

features. In addition to shift click and ctrl click actions

to extend and revise the selection, all of the items that are below an

unexpanded branch of the tree are selected if the branch is

selected.

There are other right-click menu actions on the Version 3 browsing

window that warrant comment. The menu choices are enabled or

disabled depending on the presence or absence of items and additional

properties in the selection. The Show, Value, and Properties menu actions display

information in a new window. The Copy

menu action copies the selected Item IDs for Copy and Paste, so nothing

is displayed until you paste the result somewhere.

Group Membership

The OPC standards provide some features to fine tune the behavior of

individual items in a group. Use the Group Membership notebook tab to

bring up the display of item - group associations. By selecting a

row and pressing the Edit

action button, you are presented with a dialog that lets you configure

the membership properties. All server versions allow you to

control whether an Item is active or not for data reporting.

Version 3 servers enable you to set a deadband value for an analog Item

in a group that overrides the Group setting. Version 3 servers

may optionally implement the IOPCItemSamplingMgt interface which

enables you to (1) set a sampling period for an Item that overrides the

Group setting, and (2) enable or disable buffering for an Item which

provides for multiple value change notifications per callback report.

Application Integration and Using Collected Data

Up to this point we have covered the basics of connecting to OPC

servers and configuring the collection of data. When your

connections are started, and your active groups are defined and

configured with the servers, the OnDataChange callback begins to

receive data. As each OnDataChange callback is executed, the

Supervisor application updates the opc_item

table data to contain the latest timestamp, value, and quality

information for each item in the callback report. Then the group

record in the opc_group table is updated to

show the time of the latest callback, and lastly the connection record

in the opc_client table is updated to show

the latest group callback. Your applications make use of the

collected data by opening subscriptions to these tables. The SQL

open command is used as described in the Datahub

documentation. When your application is a separate process,

you use the DMH message system to connect to the Supervisor application

and then you communicate commands to use the table subscription

features.

Example Applications

The Supervisor includes some example application code to demonstrate

connecting with the DMH and setting up a subscription feed. These

examples are short - it hardly takes any code to obtain the OPC data in

a custom application using a high-level language. The examples

assume that the OPC Supervisor is running and that it has been

initialized to act as a DMH server.

The subscriber.bat application

script is an executable batch file written in Tcl that provides an

example of subscribing to all the data being collected from a

particular server connection. There are two setup arguments that

can be passed on the command line: (1) the hostname:DmhGroupname of the

OPC Supervisor program such as localhost:OPC, and (2) the OPC

connection name, which defaults to c1. You can run multiple

instances of this application script from different computer systems

where you have installed the Hume Datahub SDK. You are

encouraged to examine the commented source code in the .bat file and

use it as a model to start your own application. The example also

applies to programming languages other than Tcl because you can use

equivalent DMH message system commands from other languages to set up

and use the same subscriptions.

A similar example is the Tcl application script, sub2group.bat. This application

provides an example of subscribing to the data collected for a single

Group. You can pass the [hostname:]DmhGroupname of the OPC

Supervisor process as a command line argument and the application will

prompt you to choose a connection and group.

OPC Subscription Procedures

The application examples use Tcl procedures to manage table

subscriptions that have been optimized for OPC usage. OPC client

software can potentially collect large volumes of data and consume lots

of network bandwidth. If Datahub table subscriptions are opened

for sending messages to remote users, and the users are disconnected,

there needs to be cleanup logic to shut these subscriptions down.

The subscriptions also aggregate the data changes of a group that occur

in a burst, and send these in a single DMH message instead of sending

one message per table row change.

The Tcl procedures and their calling arguments are:

opc_subscribe_connection clientID destbox ocname {max_messages 100}

opc_subscribe_group clientID destbox ocname groupname {max_messages 100}

opc_subscribe_close clientID destbox

These procedures are executed from any application using any

programming language by sending a plain text command message to the

SERVER_RPC mailbox of the OPC Supervisor.

The opc_subscribe_connection

procedure opens a subscription for all the data collected by a

particular OPC connection.

The opc_subscribe_group

procedure opens a subscription for data that is collected for a single

Group.

The opc_subscribe_close

procedure is called to close either subscription type.

The procedure arguments are:

- clientID - a unique value to distinguish the subscribing process from any other DMH client process, including the same application being executed multiple times. A common value to use is the clientID value obtained from the DMH message system library, such as the .NET DmhClient ClientID property value.

- destbox - the destination DMH mailbox for subscription notification messages. This is the name of a mailbox that the subscribing application uses to receive DMH messages.

- ocname - the connection name to an OPC server as configured using the OPC Supervisor application.

- groupname - the name of the Group of items as configured using the OPC Supervisor application.

- max_messages - an optionally provided argument which specifies the maximum number of DMH messages that may be waiting for the subscriber to read. If the safe subscription monitoring logic sees more than this number of pending messages in the mailbox queue, it closes the subscription and flushes and closes the mailbox.
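The command text for these procedures can be composed as an ordinary Tcl list. The following sketch only builds and prints the text; actually sending it to the SERVER_RPC mailbox is done with your DMH client library and is not shown. All of the argument values are placeholders assumed for the example.

```tcl
# Sketch only: these values are placeholders for your own deployment.
set clientID  myapp_1234      ;# hypothetical unique ID of this subscriber
set destbox   myapp_inbox     ;# hypothetical mailbox this subscriber reads
set ocname    c1              ;# OPC connection name (c1 is the subscriber.bat default)
set groupname Group1          ;# hypothetical Group name

# The list command formats each argument as one properly quoted element.
# The trailing 50 overrides the default max_messages value of 100.
set open_cmd  [list opc_subscribe_group $clientID $destbox $ocname $groupname 50]
set close_cmd [list opc_subscribe_close $clientID $destbox]

puts $open_cmd
puts $close_cmd
```

Using the same clientID and destbox values for the close command as for the open command is what ties the two together.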

The subscriptions provide notifications of data in a list format that

is

compatible with the Tcl

Reply Format that is used by the Datahub for selection results and

for subscription notifications that are not SQL. In order

to provide schema information, the subscriptions send a new

notification type, create,

when the subscription is first opened containing schema information and

a create statement for the opc_item

table. The next messages sent are selection results from the opc_item table for all of the data

items which belong to the subscribed to group(s). Then as

time proceeds update notifications for the items in the opc_item table are sent when

triggered by updates to the opc_group

table.

In order to combine many notifications in one DMH message, the

subscriptions (1) combine similar notifications that occur in sequence

to a single notification for multiple rows of data, and (2) the message

is formatted as a list of Tcl Reply Format notifications instead of a

single notification. Here is the detail on the new create

notification format. The ordinary Tcl Reply Format is a list of 7

elements:

notification_type tablename columns key_columns row_count error_info data_rows

The create type notification is formatted as a list of 8 elements:

create opc_item columns key_columns 0 {} {} SQL_create_statement

The Tcl example applications demonstrate easily parsing the

subscription messages using the Tcl vset

and foreach commands. A .NET

application uses the ListSplit

and ListElement methods of the

.NET DMH client library.
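To make the message layout concrete, here is a minimal parsing sketch in standard Tcl (8.5 or later). It uses lassign and dict rather than the Hume vset command so that it runs in any Tcl shell. The sample message, its column names, and its row values are invented for illustration; a real subscriber takes the column list from the notifications it receives.

```tcl
# Sketch only: the column names and row values below are invented for the
# example; the real schema arrives in the create notification.
set cols {item_id value quality}
set message [list \
    [list create opc_item $cols item_id 0 {} {} SQL_create_statement] \
    [list update opc_item $cols item_id 2 {} {{Tank1.Level 41.7 192} {Tank1.Temp 88.2 192}}]]

proc handle_message {message} {
    set seen {}
    foreach notification $message {
        # The first 7 elements are common to every notification type.
        lassign $notification type table columns keys row_count error_info rows
        if {$type eq "create"} {
            # The create type carries an 8th element, the SQL create statement.
            set sql [lindex $notification 7]
            lappend seen [list $type $table $sql]
            continue
        }
        foreach row $rows {
            # Pair each column name with its value in this row.
            set record {}
            foreach c $columns v $row { dict set record $c $v }
            lappend seen [list $type $table $record]
        }
    }
    return $seen
}

foreach entry [handle_message $message] { puts $entry }
```

Because one DMH message is a list of notifications, the outer foreach is what lets a single message carry a burst of changes for a whole group.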

Taking Control and Writing Data

The command button actions that the Supervisor application supports

and other actions such as writing data are accessible to an external

client application that is connected through the DMH message

system. Any Tcl programming statements such as procedure

invocations can be sent as messages to the SERVER_RPC mailbox. The

Supervisor application executes each received message and optionally

sends a reply message with the result of executing the

command. You may also use the Tcl SQL command described in

the Datahub document to query or change any table data.

Each OPC connection name is a Tcl command that can be used directly to

access any OPC Data Access capabilities. The details of the

command syntax are presented in the opc

command documentation. When formatting text commands,

no special precautions are needed with numeric data and timestamp

values. When writing string values, it may be the case that the

string data contains special characters such as quotation marks or

braces that can be used to delimit list elements. If this is

possible, it is safest to use methods that have been provided by Hume

for creating lists such as the .NET library ListAppend or ListJoin

methods.
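In Tcl itself, the list command plays the same role as the .NET ListAppend and ListJoin methods. The sketch below shows why it matters; my_write_proc and the item name are hypothetical stand-ins and are not part of the Hume software or of the opc command syntax.

```tcl
# Sketch only: my_write_proc is a hypothetical procedure name, not part of
# the Hume software; substitute the command you actually send.
set value {He said "stop" {now}}

# Naive string concatenation lets the quotes and braces change the parse.
set unsafe "my_write_proc Recipe.Comment $value"

# list quotes the value so that it stays a single element.
set safe [list my_write_proc Recipe.Comment $value]

puts [llength $unsafe]            ;# the string value has split into several elements
puts [llength $safe]              ;# 3 elements, as intended
puts [lindex $safe 2]             ;# the original string, unchanged
```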

The Application Source Code

The Tcl source code of the OPC Supervisor application is provided

for your understanding and possible customization.

The Supervisor application dynamically loads a DLL that

extends the

Datahub process with new commands for using COM and OPC. The Hume

developed Tcl OPC commands

are documented separately because they can be used independently by

any application. Similarly the Datahub Reference document

is useful to understand detailed use of the SQL table management

commands including the subscription features of the SQL open

command. If you are new to Tcl, the syntax of the language is

summarized in .../mann/Tcl.html.

Why are we using Tcl instead of a system programming language such

as Java or C#? A scripting language like Tcl is far more

productive for this kind of work. You may enjoy reading the IEEE

Computer magazine article, Scripting: Higher

Level Programming for the 21st Century, by John Ousterhout the

creator of Tcl.

SECS/GEM Equipment Interface Integration

In this section we discuss how to combine the use of OPC connections

with deploying an equipment SECS/GEM interface. Hume Integration

Software is a leading provider of cross-platform SECS/GEM software with

many installations worldwide.

Here are the requirements of SECS/GEM compliance summarized plainly:

- Front Panel Requirements

- The tool user interface provides a control to enable or disable SECS

communication. There is a text field to display the current SECS

communication state. Another control is provided to enable or

disable online control. If remote commands are supported, or if

there are host settable parameters that affect processing, then there

is a control to choose between LOCAL or REMOTE control modes during

online control. A display of the current control state is not

mandated but it is highly desirable. If terminal display

messages are supported, then there is an indicator for when a new

message has been received from the host and there is a button for the

operator to acknowledge the host display. Terminal display

messages are

not used much and may be skipped in a minimal deployment. Your

tool may not have a physical operator console with a conventional user

interface. In this situation, apply the Front Panel Requirements

to the main status screen that you provide to networked clients.

If you do not provide any kind of status screen, you are missing a

strong opportunity to improve the competitive position of your

equipment, and you are probably losing prospective

customers. Hume Integration can show you how to quickly

deploy a small footprint web server with a suitable status

screen. This can give your marketing people something real

to demonstrate; they can even show a status screen on their cell phone.

- Startup Configuration -

GEM specifies that the connection properties, timer values,

communication enablement, and control state startup are all enduser

configurable and saved in persistent storage. Many tools provide

a configuration dialog based on our example applications. A

faster deployment is possible for an OPC based tool by relying on the

configuration dialog and persistence features provided by the Hume SECS

Server.

- Process State Model - Any

batch tool has a process state model and GEM requires you to document

it and to post data collection events on process state

transitions. Our example applications feature a simple model

(INIT, IDLE, SETUP, EXECUTING, PAUSED), with the standard events being

posted.

- Variables - If

your tool has measured values such as temperatures, pressures, and

counters, these

values should be served on the SECS/GEM interface as Status

Variables. Other important status or descriptive

items should also be served as Status Variables. If your tool

uses

process programs then the name of the currently selected program is

served as variable PPExecName, and the name of the last used program

(which may still be selected) is served as variable

PPUsedName. If you have a process state model, then you

will support the ProcessState and PreviousProcessState built-in

variables.

- Parameters - Read/Write

variables which are used to configure various aspects of your tool's

behavior or processing are served on the SECS/GEM interface as ECV

parameters. Divide your parameters into two sets: (1)

values that affect current processing so the host is not allowed to

change them in LOCAL control, and (2) other parameters.

- Alarms - Alarms are an

undesirable state with both a set and clear condition. Each type

of an alarm condition is defined as a SECS alarm type and the set and

clear transitions are communicated on the SECS interface.

- Events - An event is a

notable occurrence such as the completion of a process step. Your

tool should post event occurrences on the SECS interface, which are

used as triggers to send the host dynamically defined data collection

reports containing Status Variable values.

- Standard Documentation -

SECS and GEM have strict enduser documentation requirements.

SEMI Standard PV2 for Photovoltaic equipment also adds to the

documentation requirements. Hume provides template enduser

documentation to our customers for their modification and

redistribution.

- Acceptance - SECS and GEM

have

many complexities including the specification of many message types

that are rarely used and pitfalls for naive implementations. To

ensure your interface works well and is accepted by host users, focus

on the essential requirements listed above and use a validated toolset

to fill in the low-level implementation details and behavior

models. There are many other requirements of SECS and GEM such as

implementing the communication and control state models. These

requirements

are not listed because they are already provided by the Hume toolset.

Note that the Front Panel Requirements

mandate specific features on your tool user interface. We have

seen brochures for SECS/GEM OPC integration products that claim to

eliminate the need to write any code. They must envision that you

are able to modify your user interface with new IO features and that

this programming work does not count as code. Let us

advance a contrasting counter-claim. We have created procedures

that map OPC data items to SECS items and vice versa which makes it

as simple as it gets to integrate a SECS interface with OPC

servers. You are able to deploy a SECS interface without writing new

procedures, without coding to send and receive any SECS messages, and

without

visiting dozens and dozens of configuration screens. Just edit an

integration file to contain your own OPC names instead of our example

ones. Then make a small number of changes to your tool user

interface to comply with the Front Panel Requirements. If you

have custom requirements you will not be hemmed in by the lack of

flexibility of a configuration-only product. We respect your

skills as a software developer, and we will not insult you with a claim

that sounds good to a marketing guy who doesn't know how to write code.

OPC - SECS Equipment Interface Deployment

Scenarios

There are different usage scenarios supported by this software.

Let's show them and assign shorthand names for ease of reference.

Scenario Eq1

              SECS                                 DMH
    [ Host ] <-----> [Eq SECS Server, OPC Super] <-----> [OEM SCADA Controller]
                                  \  OPC
                                   --------------< [OEM OPC Server(s)]

With scenario Eq1, the Hume OPC Supervisor/SECS server process is used

to directly serve selected OPC items as SECS Variables,

Parameters, Alarms, and Events. If there are no Parameters

directly mapped to OPC items, then the flow of OPC data is one-way from

the OPC server(s) to the SECS interface. The OPC integration is

supplementing the use of one of the Hume SECS/GEM libraries such as the

.NET SecsPort or the SecsEquip Active-X control.

The flow of SECS status information such as the communication state is

to the SCADA controller using the SECS/GEM library. In this

scenario, there is no need to configure an OPC server to have new items

that are written to by the SECS Server. The SCADA controller has

full control over the SECS interface using features of the SECS/GEM

library and the library also provides callbacks to receive status

information.

Scenario Eq2

              SECS                                 OPC
    [ Host ] <-----> [Eq SECS Server, OPC Super] <------> [OEM OPC Server(s)]

With scenario Eq2, all of the integration of the SECS interface is done

using OPC, and the Hume software only uses the client side of

OPC. This scenario depicts using products such as Wonderware's InTouch

HMI or Think & Do from Phoenix Contact which are able to

perform the OPC server role. As in

scenario Eq1, it is straightforward to directly serve OPC data items as

SECS Variables, Parameters, Alarms, and Events. An OEM OPC server

needs to have status items which the SECS software writes to in order

to meet the SECS front panel requirements. SECS is a more complex

protocol than OPC and it is less straightforward to map more advanced

SECS features such as Remote Commands to OPC. When more complex

functions are needed, one deployment option is to add application code

which runs in the Hume SECS Server process. The application code

can read and write OPC data items as well as interact fully with the

SECS interface. This deployment option is less complex than

trying to handle the SECS transaction logic in the OPC

application. The SECS Server has commands to build and parse the

message data structures of SECS which provides you with easy conversion

to and from the ordinary data items of OPC. Potential problems

with timing and synchronization are also reduced by using custom code

that runs in the SECS Server. However, if you want to initiate or

handle SECS conversations in the OPC application, you can do it.

A standard SECS interface that only needs to serve Variables,

Parameters, Alarms, and Events does not need any coding of SECS message

conversations. Also, Hume provides example code and integration

of the common Remote Commands such as START, STOP, PAUSE, RESUME, ABORT

and PP-SELECT so the OEM needs only to deploy the command logic in his

tool controller.

Scenario Eq3

              SECS                           OPC
    [ Host ] <-----> [Eq SECS Server     ] <------> [OEM OPC Client SCADA Controller]
                     [OPC Server & Client]                      |
                        |                   OPC                 |
                        \----------------------< [OEM OPC Server(s)]

Scenario Eq3 is similar to Eq2 except we assume that the OEM's SCADA

controller can only be integrated using OPC client side logic, and

further, there is no ability to add items to any OEM OPC server for the

writing of SECS status information. In this scenario, the Hume

software provides an OPC server with items that are defined for SECS

integration. The Hume SECS server process can also use OPC client

side logic to directly serve OPC items as SECS Variables, Parameters,

Alarms, and Events if there are OPC servers being used for process data

collection. Does this scenario fit your situation? If so,

please tell us. We are thinking this more complex scenario is not

common, and we have not released an OPC server product.

SECS Integration Procedures

The Hume Datahub SDK includes code for integrating OPC with an

equipment SECS interface. If you are not using the .NET SecsPort

library, the file opc_secs_init.tcl

in the

<install_base>\gem2\equip directory has Tcl code that should be

modified for the custom features of your equipment. The typical

equipment OEM will only need to edit in item names for his equipment

type. There are instructions in this code file for running

the OPC Supervisor without running the SECS interface in order to

configure OPC groups. There are also instructions to run

the gemsim program script with the OPC integration enabled. You

run the SECS and OPC software together once you have your groups and

item names configured. The optional command line argument -notk causes the gemsim program to

run without showing any windows.

If you are using the .NET SecsPort

component library in combination with these OPC features, then you can

ignore editing the opc_secs_init.tcl

file. We have expanded the SecsPort library to include methods

for integrating OPC data items with your SECS interface(s) instead of

editing this file.

The main procedure in this file is opc_secs_init.

You edit the body of this procedure to specify the OPC item_id values

from your server(s) that are mapped to SECS Alarms, Events, Parameters,

and Variables. The procedures that are used to perform the item

mapping are documented below by function.

Alarms

Changes to some OPC item values may signify SECS alarms. An alarm

is an undesirable condition with a set and clear state. In the

usual case, you assign ALID integer identifiers to each alarm type

starting from 1000. As you add alarm definitions, the SECS

software assigns Events for the Setting and Clearing transitions of the

alarm condition. These events will be signalled

automatically. There is a configuration value in the opc_secs_init procedure to specify

whether different Alarm types share the same Events which is the

default. In this case you may number your ALID values to

increase in sequence by 1. The procedure

opc_secs_alarm_add spname opc_item_id ALID ALTX {ocname {}} {has_min 1} {min 0} {has_max 1} {max 0}

is called to map the data of an OPC item to an alarm

condition. The argument spname

is the name of the SECS interface connection. The opc_item_id argument is the fully

qualified OPC item name. The ALID and ALTX arguments represent

the SECS data items for the alarm identifier and its description.

The description is limited to 120 characters. The OPC connection

name argument, ocname, is set to opc_spname if it is defaulted.

The arguments that have default values are shown as pairs with

surrounding braces. If a default value is shown, the argument is

optional and does not need to be supplied when the procedure is

called. For example, {has_min 1} means that the default value of has_min is 1. The alarm

condition is set for the item if ($has_min && ($value <

$min)) || ($has_max && ($value > $max)). So the

default argument values map a non-zero value to the alarm set state

which works well for any representation of a boolean value. You

may use the optional arguments to specify a lower bound, an upper bound

or a range for the alarm set condition.
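The set condition can be tried out in isolation. The sketch below wraps the documented expression in a stand-alone procedure with the same default values; the procedure name alarm_is_set is an assumption for the example and is not part of the Hume toolset.

```tcl
# Sketch only: this mirrors the documented set condition; it is not the
# Hume implementation.
proc alarm_is_set {value {has_min 1} {min 0} {has_max 1} {max 0}} {
    return [expr {($has_min && ($value < $min)) || ($has_max && ($value > $max))}]
}

puts [alarm_is_set 0]              ;# defaults: zero is the clear state
puts [alarm_is_set 1]              ;# defaults: any non-zero value sets the alarm
puts [alarm_is_set 95 0 0 1 90]    ;# upper bound only: set when value > 90
puts [alarm_is_set 50 1 10 1 90]   ;# range 10..90: 50 is inside, so clear
```

Setting has_min or has_max to 0 removes that bound from the test, which is how a one-sided limit is expressed.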

The procedure

opc_secs_alarm_init spname

is called once the alarms are defined. It creates a table

subscription that manages setting and clearing SECS alarms.

Our example code also calls

opc_secs_connect_alarm spname ocname ALID

The opc_secs_connect_alarm

procedure installs logic so that if the OPC connection ocname is ever lost, a SECS alarm

is indicated. You can test this alarm by using the OPC GUI to

disconnect and re-connect to the OPC Server. The integration

logic is able to recover automatically when the connection is

restored. In the few cases where transient server handle values

are used, new values are re-acquired.

Events

Changes to some OPC item values may signify Events. A good

example is an enumerated integer which represents the process state and

every value change is an Event. A batch tool is required to post

data collection Events on the transitions of its process state.

Another example is when a data item value is a counter of something

non-trivial.

The procedure opc_secs_event_add

is used to map OPC value changes to SECS Events.

opc_secs_event_add spname opc_item_id CEID description

{is_reported 1} {ocname {}} {criteria {}} {delay 0}

The event identifier, CEID,

is an integer value from 5000 to 9999. By default any value

change of the OPC item signals a SECS event. The optional criteria argument enables you to

specify an expression involving last_value

such as {$last_value > 0} to filter which value changes signify

events. There is also an optional argument, delay, which can be used to specify

a timed interval delay in milliseconds before the SECS event is

posted. The default delay value is 0 which causes the SECS event

to be posted immediately after the OPC group refresh which contained

the data update which triggered the event has been completely

processed. Additional delay can be used to allow for receiving

updated data values coming later or coming from different OPC group

refreshes.
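The effect of the criteria argument can be sketched in plain Tcl. This is a stand-in for the filtering step, not the toolset's own code; the procedure name is hypothetical, and it assumes that last_value holds the most recently reported value of the item.

```tcl
# Sketch only: a stand-in for the filtering step, not the Hume code.
# An empty criteria string means every value change is an event.
proc change_is_event {criteria last_value} {
    if {$criteria eq ""} { return 1 }
    # expr substitutes $last_value from this procedure's local variables.
    return [expr $criteria]
}

puts [change_is_event {} 0]                   ;# no criteria: always an event
puts [change_is_event {$last_value > 0} 3]    ;# passes the filter
puts [change_is_event {$last_value > 0} 0]    ;# filtered out
```

Passing the criteria in braces, as in the documented example, defers the substitution of last_value until the expression is evaluated for each value change.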

You are able to specify a CEID value of one of the standard built-in SECS events

to indicate that the standard event is triggered by the OPC item value

change. You will likely specify the CEID value of 4050 for

the ProcessStateUpdate event and link it to your OPC process state

variable.

Once the events are configured, the procedure

opc_secs_event_init spname

is called to turn on the value monitoring subscription for events.

Parameters

A Parameter is a read/write variable data item served on the SECS

interface such as a configuration choice or a setpoint. The

procedure used to configure OPC read/write items as SECS ECV Parameters

includes arguments for the minimum, maximum and default values of the

variable. A Parameter should be a scalar value and not an array

or list of values. The toolset has built-in logic to choose the

SECS value type based on the OPC canonical data type but you can

specify a choice using the value_TSN

argument. The calling signature of the add procedure is:

opc_secs_parameter_add spname opc_item_id varID description ecmin ecmax ecdef {initial_value {}} \

{affects_processing 1} {ocname {}} {opc_group Parameters} {varname {}} {value_TSN {}} {units {}}

The default initial_value is

the current value of the item. If the affects_processing argument is set

to 1 then the host is prevented from changing the parameter value

except when the control state is in REMOTE control. The

default variable name, varname,

is the OPC item ID string.

The SECS standards specify that Parameter values set by the host are

persistent. The toolset has Parameter persistence features

built-in. Once the Parameters are configured, a procedure call is

made to restore any non-default values set by the host, and then the

opc_secs_parameter_init spname

procedure is called to initialize the value forwarding logic.

Properties (Configuration)

The SECS interface has various properties that control startup and

dynamic behavior such as whether SECS communication is enabled, and the

choice of the LOCAL or REMOTE control state when online. Some

properties reflect choices that are made at development time and are

not changed so there is no need to control them by OPC. For these

static properties, you edit your choices into the opc_secs_static_properties_init

procedure. Other properties may change from session to session or

may change while running. We provide integration logic so that

OPC items control these dynamic properties. You edit your mapping

of OPC item names into the opc_secs_dynamic_properties_init

procedure. You will need to have OPC items to control the

communication state and the control state in

order to comply with the Front Panel Requirements. Use the

existing code as your guide to which items are

needed. The

initialization of properties is performed by calling

opc_secs_properties_init spname ocname

For scenario Eq1, you use the property and configuration features of

your SECS/GEM code library and you do not need the call to opc_secs_properties_init.

For scenario Eq2, a rapid deployment option is to let the SECS Server

provide an editing dialog and

persistence of the connection startup properties. This option is

documented in the opc_secs_init.tcl file. The initialization call

for connection properties is already coded in this file.

Status Information

The flow of status information from the SECS interface can be mapped to

writing various OPC items to support the Eq2 scenario. You need

to receive and display the current communication state to comply with

the Front Panel Requirements. Edit your OPC item names into the

procedure opc_secs_status_init.

You are able to receive display text of the data being exchanged on the

SECS interface if you configure trace related items.

Remote Commands

For deployment scenario Eq2, there are working example procedures in

the opc_secs_init.tcl file for

the common Remote Commands of GEM. The logic parses the command

and argument data items from the SECS messages and writes these to OPC data

items. Your tool logic needs to

read and respond to updated OPC remote command items and set an

appropriate return code value (HCACK). This

is less complex than re-creating SECS parsing and reply logic in your

OPC application.

For deployment scenario Eq1, you have SECS parsing and reply features

in your library API. Use these features to implement Remote

Commands and ignore the code that is provided for scenario Eq2.

Variables

An OPC item is easily mapped to a read-only Status Variable value on

the SECS interface. A SECS host is able to dynamically configure

data collection event reports and ask to receive certain Variable

values at the occurrence of specified Events. A variable value

can be an array. The mapping of the OPC data type to a

corresponding SECS data type is done automatically. An array of

string values or variant values is mapped to a SECS list of string

values.

Here is the calling signature of the add procedure:

opc_secs_variable_add spname opc_item_id varID description \
    {ocname {}} {varname {}} {add_quality 0} \
    {value_mapping {}} {value_TSN {}} {units {}} \
    {qual_varID 0} {qual_name {}} {qual_description {}}

The varID argument is an

integer identifier which is set to a unique value between 3000 and 9999

for new SECS variables. The procedure will optionally add the

quality property of the OPC item as a second SECS Variable. When

the quality is being added and the qual_varID

value is defaulted, the variable ID assigned to the quality variable is

(varID + 1). So if you

choose to serve quality values on the SECS interface, increment your varID value by 2 for each

successive add call. The default name of the quality Variable is "varname quality" and the default

description of the quality variable is "OPC quality bitfield for varname value".
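The numbering convention above can be sketched as follows. This is only an illustration: the interface name gemsim is borrowed from the example later in this section, and the OPC item IDs and descriptions are hypothetical. The stub procedure is defined only when the real opc_secs_variable_add is not loaded, so that the loop can be tried in a plain tclsh.

```tcl
# Stand-in used only when the real procedure is not loaded (for example,
# when trying this sketch outside the SECS Server). It records each call.
if {[llength [info commands opc_secs_variable_add]] == 0} {
    proc opc_secs_variable_add {spname opc_item_id varID description args} {
        lappend ::added_varIDs $varID
        puts "add $opc_item_id as varID $varID"
    }
}

set added_varIDs {}
# First ID in the 3000 to 9999 range reserved for new variables.
set varID 3000

# Hypothetical OPC item IDs and descriptions; substitute your own.
foreach {item description} {
    Tool.ChamberPressure "Chamber pressure"
    Tool.ChamberTemp     "Chamber temperature"
    Tool.DoorClosed      "Door closed flag"
} {
    # ocname and varname are passed as their default empty values and
    # add_quality is 1, so the quality variable defaults to varID + 1.
    opc_secs_variable_add gemsim $item $varID $description {} {} 1
    # Skip over the ID taken by the quality variable.
    incr varID 2
}
```

With this pattern the value variables take the even IDs 3000, 3002, 3004 and the quality variables take the odd IDs in between.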

The value_mapping argument

provides for supplying an expression to compute the value for the SECS

variable from the OPC value. For example, OPC uses enumerated

integer values starting from 0. SECS process state variables are

enumerated integer values that start from 64. Supplying the value_mapping argument of {expr

{$last_raw + 64}} maps the OPC value into the desired SECS

value. Use the variable last_raw

to access the OPC item value in your value_mapping

expression.
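As a minimal sketch, the mapping script from the text can be tried in a plain Tcl interpreter. It assumes that the script is evaluated with last_raw already set to the OPC value; the evaluation context used by the SECS Server may differ in detail.

```tcl
# The mapping script from the text; it reads the OPC value from last_raw.
set value_mapping {expr {$last_raw + 64}}

# Evaluate the mapping for a few enumerated OPC values.
set mapped {}
foreach last_raw {0 1 2} {
    lappend mapped [eval $value_mapping]
}
puts $mapped
```

The OPC values 0, 1, and 2 map to the SECS values 64, 65, and 66.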

You can also pass the varID value of a standard built-in variable

to indicate that an OPC item supplies its value. A batch

processing tool that uses process programs must support the PPExecName

and PPUsedName Status Variables.

A batch processing tool also needs to support the SECS ProcessState and

PreviousProcessState Status Variables. Our example code is able

to monitor your OPC ProcessState variable and compute the

PreviousProcessState variable value for you. The code also posts

certain required additional SECS/GEM process state change events based

on the previous and current process states. These events are in

addition to the ProcessStateUpdate event which is posted for every

change of the ProcessState variable value. This example code is

initialized by calling the opc_secs_process_state_var

procedure which has this calling signature:

opc_secs_process_state_var ocname item_id spname {currentStateVarId 810} {previousStateVarID 800}
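The idea of deriving PreviousProcessState from changes of ProcessState can be sketched in a few lines of Tcl. This is an illustration only and is not the code behind opc_secs_process_state_var; the procedure name process_state_changed and the global variable names are hypothetical.

```tcl
# Illustrative only: track the current and previous process state.
# Returns 1 when the state changed (the point at which a state change
# event would be posted), otherwise 0.
proc process_state_changed {new_state} {
    global ProcessState PreviousProcessState
    if {![info exists ProcessState]} {
        # First value seen; there is no previous state to record yet.
        set ProcessState $new_state
        return 0
    }
    if {$new_state == $ProcessState} {
        # Unchanged value; nothing to update.
        return 0
    }
    set PreviousProcessState $ProcessState
    set ProcessState $new_state
    return 1
}

# Feed a short sequence of state values, including one repeat.
set changes {}
foreach state {64 65 65 66} {
    lappend changes [process_state_changed $state]
}
puts "changes=$changes current=$ProcessState previous=$PreviousProcessState"
```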

Control

Your OPC application may want to shut down the SECS Server/OPC

Supervisor process as part of its shutdown. Or, you may have

requirements where you will want to execute code in the SECS

Server. With scenario Eq1, the SECS library provides these

features.

With scenario Eq2, there are a handful of OPC data items, typically

boolean, that control features of the SECS Server. You can edit

the opc_secs_init.tcl file to substitute your own OPC item names:

- tracewinShow - Setting this item to 1 or 0 causes the SECS

Communication Trace window to be displayed or closed. This is an

important feature to make accessible from the tool. You will want

this feature to diagnose any communication problems in the field.

- tablewinShow - Setting this item to 1 or 0 causes the SECS Server

Data Table window to be displayed or hidden. This feature is only

used during development.

- exitFlag - If this item transitions to a non-zero value while the

SECS server is running, the SECS Server exits. The SECS

Server does not exit during startup if the value is already non-zero.

- configureShow - Setting this value to 1 or 0 causes the SECS

property configuration dialog to be displayed or closed. If your

tool provides this variable, the SECS server logic provides persistence

and editing of all of the SECS startup properties that are required to

be persistent such as the HSMS socket port, HSMS active or passive, the

SECS Device ID, timer values, the starting Control State, and whether

to start with SECS communication enabled. Including this variable

provides for the quickest deployment since you do not have to provide

an editing screen or provide persistence of SECS properties in the tool

controller software.
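The exitFlag behavior described in the list above is edge-triggered: only a change to a non-zero value while running causes an exit, and a value that is already non-zero at startup does not. A minimal sketch of that rule follows. The procedure name exit_flag_update is hypothetical, and the sketch is not the SECS Server's own code.

```tcl
# Illustrative only: return 1 when the flag goes from zero to non-zero.
# The first value seen (startup) never triggers, even if it is non-zero.
proc exit_flag_update {new_value} {
    global exit_flag_last
    set fire 0
    if {[info exists exit_flag_last] && $exit_flag_last == 0 && $new_value != 0} {
        set fire 1
    }
    set exit_flag_last $new_value
    return $fire
}

# Startup with the flag already set, then cleared, then set again.
set fired {}
foreach value {1 1 0 1} {
    lappend fired [exit_flag_update $value]
}
puts $fired
```

Only the last update, the change from 0 to 1, would cause an exit. A tool controller that leaves the flag set from a previous session therefore needs to clear it before setting it again.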

See the comments at the end of the opc_secs_init.tcl file for helpful

advice on starting up the SECS Server from the tool controller when

deploying scenario Eq2.

You may optionally deploy an advanced feature that provides complete

control over the SECS Server. An OPC string value item can be

configured so that

changes to its value are used as Tcl code to execute in the SECS Server

process. A Tcl

evaluation returns the integer return code 0 to indicate that the code

evaluated without error, and a string result. These can be

written to OPC items to provide result data. You cannot

execute the exact same command string twice in a row because the

evaluation logic only receives changed data values. One

solution is to append unique comment text to every command

invocation. However, you may face the same uniqueness issue when

receiving the reply result data. Instead of appending unique

comment text which will not be seen in the evaluation result, you may

want to tell the Tcl interpreter to return both a transaction ID and

the result as a list. For example, if your SECS interface name is

gemsim and you wanted to send the SECS echo message repeatedly, your

code string values might be:

list 1 [gemsim put S2F25R {B 1 2 3}]

list 2 [gemsim put S2F25R {B 1 2 3}]

list 3 [gemsim put S2F25R {B 1 2 3}]

...
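The tool side of this technique can be sketched as follows, assuming a simple counter is used as the transaction ID. The helper name tagged_command and the reply text are hypothetical; the real reply contains whatever your command returns.

```tcl
# Transaction counter; each generated command string is unique.
set txid 0

# Wrap a Tcl command so that its evaluation returns {txid result}.
proc tagged_command {script} {
    global txid
    incr txid
    return [format {list %d [%s]} $txid $script]
}

# Two identical requests produce two different code strings, so both
# are seen as changed values by the evaluation logic.
set cmd1 [tagged_command {gemsim put S2F25R {B 1 2 3}}]
set cmd2 [tagged_command {gemsim put S2F25R {B 1 2 3}}]
puts $cmd1
puts $cmd2

# When the result item changes, split it back into ID and result.
# The reply text here is a placeholder, not real SECS output.
set reply [list 2 "placeholder result"]
set reply_id [lindex $reply 0]
set reply_result [lindex $reply 1]
puts "reply for transaction $reply_id: $reply_result"
```

Because the transaction ID is part of the result list, two identical replies are also written as distinct values, and the tool can match each reply to the command that produced it.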

The built-in procedure to

provide an evaluation item is initialized by calling:

opc_secs_eval spname ocname groupname opc_code_item opc_rc_item opc_result_item

The evaluation item is very general and powerful; for example, you can

issue commands to show and hide windows. The source code in the

file opc_secs_init.tcl has

comments to suggest a few commands.

If you are new to SECS, all of the above can be a little confusing and

complex. Keep a cool head and follow the example code.

Ask for

support when you need it.

SQL Table Schema

The following in-memory, high performance datahub tables are used by

the OPC application.

The table data is loaded from the file system during the

application

startup. By default, a sub-directory, data,

of the program's working directory is used for saving the table

data. The directory used for data saving can also be specified by

setting an environment variable, HUME_OPC_DATA_DIR,

to the desired directory using a Unix-style, slash-delimited pathname

such as c:/humeOPC/data. Each table has its own file, tablename.tab, containing SQL

statements for the table data. As the application runs, new rows

may be added, or rows

may be updated in the in-memory table data structures. By

default, there is no logic to save the table

data

to the file system before shutting down. Typically, if you make

configuration changes, you need to

explicitly press a save button on the user interface to

preserve

your changes for next time. You can also save the data tables

programmatically

by calling the opc_client_save

procedure.
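The directory rule described above can be sketched in Tcl as follows. The helper name opc_data_dir is hypothetical and is not part of the application; it only restates the documented rule so that you can check where a table file such as opc_appstart.tab is expected to be.

```tcl
# Illustrative only: resolve the data directory the way the text
# describes. The environment variable wins; otherwise the data
# sub-directory of the working directory is used.
proc opc_data_dir {} {
    global env
    if {[info exists env(HUME_OPC_DATA_DIR)]} {
        return $env(HUME_OPC_DATA_DIR)
    }
    return [file join [pwd] data]
}

# Each table is stored in its own file named tablename.tab.
set table_file [file join [opc_data_dir] opc_appstart.tab]
puts $table_file
```

Since the table data is loaded during application startup, the environment variable needs to be set before the application is started.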

Table Directory

Schema Notes

- Boolean Values

- Boolean values are usually stored as integers (also referred to

as

int).

In this convention, 0 indicates false and 1 is the preferred value of

true.

However it is usually the case that any non-zero value is acceptable as

true.

- Key column

- Under Key the abbreviation PK indicates that

the field

is

a primary key of the table.

- The abbreviation PCK indicates that the field is part

of a

primary

composite key for the table. In other words, the field and one or

more other fields, taken together, are the primary key for the

table.

There cannot be multiple rows in a table that have the same value of

the

primary key.

opc_appstart

Application Startup Configuration

This table provides configuration records for the startup of the

application particularly for non-interactive use such as being run as a

Windows service.

At present, the defined fields provide for DMH message system

initialization. The table could easily include the startup of

other features such as HTTP service but the current design is to deploy